Type the following command line: %SPARK_HOME%\bin\spark-shell The Scala command-line prompt should then appear automatically.

HOW TO INSTALL SPARK ON WINDOWS

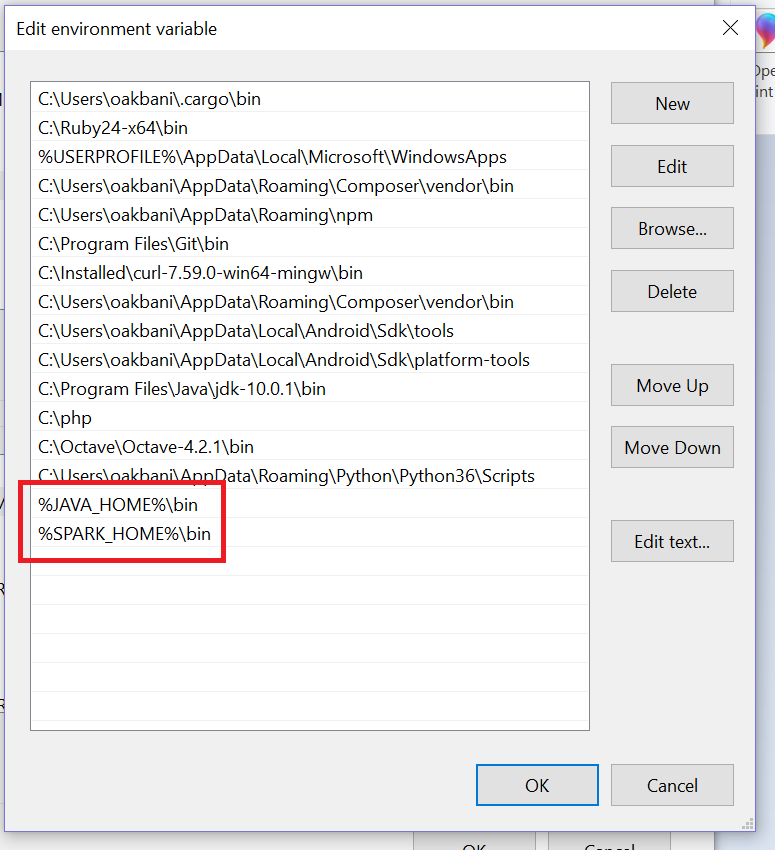

Point SPARK_HOME to the extracted directory, then add to PATH: %SPARK_HOME%\bin Download the winutils executable from the Hortonworks repository, or from the Amazon AWS platform. Create a directory where you place the executable winutils.exe. Add the environment variable %HADOOP_HOME%, which points to this directory, then add %HADOOP_HOME%\bin to PATH.

Using the command line, create the directory: mkdir C:\tmp\hive Using the executable that you downloaded, grant full permissions on the directory you created, using Unix-style notation: %HADOOP_HOME%\bin\winutils.exe chmod 777 /tmp/hive
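The winutils steps above are easy to get subtly wrong, so a quick check can help. The following is a minimal sketch in Python, not part of the original instructions: the function name `check_winutils_setup` is hypothetical, and it assumes the layout described above (winutils.exe inside a bin directory under HADOOP_HOME, with that bin directory on PATH).

```python
import os
from pathlib import Path


def check_winutils_setup(env):
    """Return a list of problems found in the given environment mapping.

    Hypothetical helper: assumes winutils.exe lives in %HADOOP_HOME%\\bin
    and that this bin directory has been added to PATH.
    """
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        return ["HADOOP_HOME is not set"]

    problems = []
    bin_dir = Path(hadoop_home) / "bin"
    if not (bin_dir / "winutils.exe").is_file():
        problems.append(f"winutils.exe not found in {bin_dir}")

    # PATH entries are separated by os.pathsep (';' on Windows).
    # normcase makes the comparison case-insensitive on Windows.
    path_entries = [
        os.path.normcase(os.path.normpath(entry))
        for entry in env.get("PATH", "").split(os.pathsep)
        if entry
    ]
    if os.path.normcase(os.path.normpath(str(bin_dir))) not in path_entries:
        problems.append(f"{bin_dir} is not on PATH")
    return problems


if __name__ == "__main__":
    found = check_winutils_setup(os.environ)
    for problem in found:
        print(problem)
    if not found:
        print("winutils setup looks consistent")
```

Run it from a new command prompt, since environment variables changed through the Control Panel are only visible to processes started afterwards.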

Trying to work with spark-2.x.x, building the Spark source code didn't work for me. So, although I'm not going to use Hadoop, I downloaded the pre-built Spark with Hadoop embedded: spark-2.0.0-bin-hadoop2.7.tar.gz

To test that the Java installation is complete, open a command prompt, type java and hit Enter. If you receive the message 'Java' is not recognized as an internal or external command, you need to configure your environment variables JAVA_HOME and PATH to point to the path of the JDK.

Set SCALA_HOME in Control Panel\System and Security\System: go to "Adv System settings" and add %SCALA_HOME%\bin to the PATH variable in the environment variables. Install Python 2.6 or later from the Python download link.

Download SBT. Install it and set SBT_HOME as an environment variable with value as >.

Download winutils.exe from the HortonWorks repo or git repo. Since we don't have a local Hadoop installation on Windows, we have to download winutils.exe and place it in a bin directory under a created Hadoop home directory. Set HADOOP_HOME = > in the environment variables.

We will be using a pre-built Spark package, so choose a Spark package pre-built for Hadoop on the Spark download page. Set SPARK_HOME and add %SPARK_HOME%\bin to the PATH variable in the environment variables. Open in a browser to see the SparkContext web UI.
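With this many variables to set, it can be handy to confirm them in one go before launching spark-shell. The sketch below is an illustration only: the helper names `missing_variables` and `find_command` are made up for this example, and the list of variables is simply the set named in the steps above.

```python
import os
import shutil

# Variables mentioned in the steps above.
REQUIRED_VARIABLES = (
    "JAVA_HOME",
    "SCALA_HOME",
    "SBT_HOME",
    "HADOOP_HOME",
    "SPARK_HOME",
)


def missing_variables(env, required=REQUIRED_VARIABLES):
    """Return the names in `required` that are unset or empty in `env`."""
    return [name for name in required if not env.get(name)]


def find_command(name, env):
    """Return the full path of `name` as resolved through env's PATH, or None."""
    return shutil.which(name, path=env.get("PATH", ""))


if __name__ == "__main__":
    for name in missing_variables(os.environ):
        print(f"{name} is not set")
    for command in ("java", "spark-shell"):
        location = find_command(command, os.environ)
        print(f"{command}: {location or 'not found on PATH'}")
```

If java is reported as not found, that matches the "'Java' is not recognized" message described above and points at JAVA_HOME or PATH.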
